Ability, Capability & Capacity (3C)

This report assesses the load-bearing strength of the organization through the 3C lens: Ability, Capability, and Capacity. The lens asks not only whether people know and can do things (Ability), but also whether the organization can repeatedly produce outcomes through stable practice (Capability) and whether it can sustain and scale performance under change (Capacity).

A. What we measured

The 3C indices are derived from the Streams × Blocks matrix by clustering cells into three layers of strength:

- Ability: foundational readiness, meaning available knowledge and skills and the baseline potential to perform.

- Capability: repeatable performance, meaning how reliably ability converts into task performance and contextual outcomes.

- Capacity: scalable resilience, meaning whether the organization can sustain and expand performance under complexity and change.

All indices are on a 0–5 scale. A working maturity threshold of 3.0 is used as the reference point for interpretation.

B. What we found (data)

B1. 3C scoring extraction

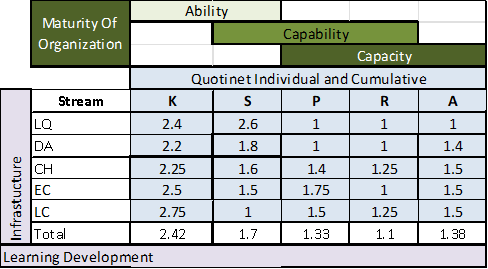

Figure: 3C extraction view

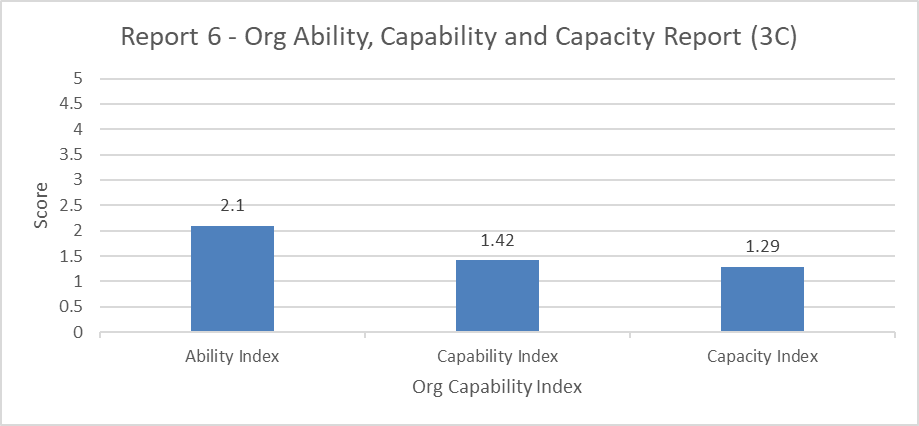

B2. 3C index scores

| Index | Score | Gap to 5.0 |
|---|---|---|
| Ability Index | 2.10 | 2.90 |
| Capability Index | 1.42 | 3.58 |
| Capacity Index | 1.29 | 3.71 |
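The Gap to 5.0 column is the distance from each score to the top of the scale. The minimal Python sketch below assumes only the B2 values plus the 0–5 scale and 3.0 threshold from section A; it reproduces that column and adds a derived distance to the threshold. The helper names `gap_to_max` and `gap_to_threshold` are hypothetical.

```python
# Assumed constants: 0-5 scale and 3.0 working threshold, as stated in section A.
SCALE_MAX = 5.0
THRESHOLD = 3.0

# Index scores as reported in table B2.
indices = {"Ability": 2.10, "Capability": 1.42, "Capacity": 1.29}


def gap_to_max(score: float, scale_max: float = SCALE_MAX) -> float:
    """Distance from the top of the scale, rounded to two decimals."""
    return round(scale_max - score, 2)


def gap_to_threshold(score: float, threshold: float = THRESHOLD) -> float:
    """Distance below the working threshold; 0.0 once a score reaches it."""
    return round(max(threshold - score, 0.0), 2)


for name, score in indices.items():
    print(f"{name:<10} score={score:.2f} "
          f"gap_to_5={gap_to_max(score):.2f} gap_to_3={gap_to_threshold(score):.2f}")
```

The threshold gap is a derived view, not a column in the original table. It shows how far each layer must move to reach the interpretation reference, which is the more actionable distance for a single cycle.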

B3. 3C chart

Figure: Org Ability, Capability and Capacity (3C)

B4. Supporting matrix values (Streams × Blocks)

| Stream | K | S | P | R | A | Stream Score (avg of K–A) |
|---|---|---|---|---|---|---|
| LQ | 2.40 | 2.60 | 1.00 | 1.00 | 1.00 | 1.60 |
| DA | 2.20 | 1.80 | 1.00 | 1.00 | 1.40 | 1.48 |
| CH | 2.25 | 1.60 | 1.40 | 1.25 | 1.50 | 1.60 |
| EC | 2.50 | 1.50 | 1.75 | 1.00 | 1.50 | 1.65 |
| LC | 2.75 | 1.00 | 1.50 | 1.25 | 1.50 | 1.60 |
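Each Stream Score is the unweighted mean of that stream's five block values. The sketch below copies the B4 cells and recomputes the column as a consistency check. The helper name `stream_scores` is hypothetical. The sketch does not attempt to reproduce the 3C indices, which this report takes from the reference chart.

```python
from statistics import mean

# Block order used in table B4.
BLOCKS = ("K", "S", "P", "R", "A")

# Cell values copied from table B4: one tuple per stream, in BLOCKS order.
matrix = {
    "LQ": (2.40, 2.60, 1.00, 1.00, 1.00),
    "DA": (2.20, 1.80, 1.00, 1.00, 1.40),
    "CH": (2.25, 1.60, 1.40, 1.25, 1.50),
    "EC": (2.50, 1.50, 1.75, 1.00, 1.50),
    "LC": (2.75, 1.00, 1.50, 1.25, 1.50),
}


def stream_scores(m: dict[str, tuple[float, ...]]) -> dict[str, float]:
    """Unweighted mean of each stream's block values, rounded to two decimals."""
    for stream, cells in m.items():
        if len(cells) != len(BLOCKS):
            raise ValueError(f"{stream} has {len(cells)} cells, expected {len(BLOCKS)}")
    return {stream: round(mean(cells), 2) for stream, cells in m.items()}


for stream, score in stream_scores(matrix).items():
    print(f"{stream}: {score:.2f}")
```

All five recomputed values match the reported Stream Score column.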

Headline reading

Ability (2.10) is materially higher than Capability (1.42) and Capacity (1.29), and all three sit below the 3.0 working threshold. This suggests the organization has some foundational readiness (people can access knowledge and develop skills) but struggles to convert that readiness into repeatable performance (Capability), and struggles even more to sustain and scale it (Capacity).
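The "materially higher" reading can be made concrete by computing the step-down between adjacent layers. This illustrative Python sketch uses the B2 values and the 3.0 threshold. The helper names are hypothetical.

```python
THRESHOLD = 3.0  # working threshold from section A

# Reported 3C indices (table B2), ordered from foundation to scale.
layers = [("Ability", 2.10), ("Capability", 1.42), ("Capacity", 1.29)]


def step_downs(ordered: list[tuple[str, float]]) -> list[tuple[str, str, float]]:
    """Score drop from each layer to the next one in the stack, rounded to two decimals."""
    return [(prev[0], nxt[0], round(prev[1] - nxt[1], 2))
            for prev, nxt in zip(ordered, ordered[1:])]


def strictly_descending(ordered: list[tuple[str, float]]) -> bool:
    """True when each layer scores lower than the layer before it."""
    return all(prev[1] > nxt[1] for prev, nxt in zip(ordered, ordered[1:]))


def all_below_threshold(ordered: list[tuple[str, float]],
                        threshold: float = THRESHOLD) -> bool:
    """True when no layer has reached the working threshold."""
    return all(score < threshold for _, score in ordered)


for prev, nxt, drop in step_downs(layers):
    print(f"{prev} -> {nxt}: -{drop:.2f}")
print("descending:", strictly_descending(layers))
print("all below threshold:", all_below_threshold(layers))
```

With the reported values, the drop from Ability to Capability (0.68) is about five times the drop from Capability to Capacity (0.13). This supports placing the main conversion problem between readiness and repeatable performance.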

C. Interpretation (patterns and meaning)

C1. The ‘readiness without conversion’ profile

In a typical 3C profile, Ability develops first. When Capability and Capacity lag well behind, however, it signals that learning and enablement are not embedded in the operating system. The organization may train and inform, but it does not consistently build repeatable execution, nor does it have a strong system for scaling good practice across teams and functions.

C2. What this looks like in practice

- Teams can perform when strong individuals or managers drive it, but performance varies widely across units.

- Skill development exists, but on-the-job proficiency validation is inconsistent.

- Improvements occur as projects or pilots, but they do not become standard work at scale.

- Under pressure (change, volume, disruption), performance drops because routines and governance are not resilient.

D. Likely root causes (diagnostic hypotheses)

Given Ability > Capability > Capacity, the most probable causes to test are:

- Weak proficiency architecture: ‘what good looks like’ is not defined as observable standards by role/task.

- Insufficient deliberate practice: practice time, repetition, and feedback are not designed into work rhythms.

- Limited governance and measurement: lead/lag indicators are not reviewed consistently to drive improvement.

- Thin scaling mechanisms: playbooks, coaching kits, and replication forums do not exist or are not used.

E. What to do next (Arena-first vs Pathway activation)

Because Capability and Capacity are well below the 3.0 threshold, the next cycle should be Arena-first: build scaffolds that make performance repeatable and scalable. Once conversion is stable, activate pathways by scaling and replicating practice across functions and layers.
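The Arena-first versus Pathway activation choice can be expressed as a simple gate on the index scores. The rule below is an assumption, not a documented part of the 3C method: it stays Arena-first while either Capability or Capacity is under the 3.0 threshold. `next_cycle_mode` is a hypothetical name.

```python
THRESHOLD = 3.0  # working threshold from section A


def next_cycle_mode(indices: dict[str, float], threshold: float = THRESHOLD) -> str:
    """Hypothetical gate: remain Arena-first while either conversion layer
    (Capability or Capacity) is below the threshold; otherwise move on to
    Pathway activation (scaling and replicating across functions and layers)."""
    if indices["Capability"] < threshold or indices["Capacity"] < threshold:
        return "Arena-first"
    return "Pathway activation"


current = {"Ability": 2.10, "Capability": 1.42, "Capacity": 1.29}  # table B2
print(next_cycle_mode(current))
```

Ability is deliberately left out of the gate because the text ties the decision to Capability and Capacity. A stricter variant could also require Ability to reach the threshold before pathways are activated.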

E1. 90-day move set to lift Capability

- Define proficiency standards for priority roles/tasks; publish rubrics/checklists used in coaching and evaluation.

- Introduce on-the-job validation: observation, task checks, and micro-certification for critical tasks.

- Embed weekly practice and feedback loops into team rhythms; managers coach to standards, not opinion.


E2. 90-day move set to lift Capacity

- Create a replication pipeline: document successful practices as playbooks; run cross-team share-outs to replicate.

- Establish a sensing-and-response routine: capture signals → experiment → learn → standardize into BAU.

- Strengthen governance: monthly review of capability metrics, resource allocation, and constraint removal.


E3. Measures to track (next cycle)

- Ability: role clarity and content readiness; skill certification rates.

- Capability: % validated proficiency cycles; uplift in role-level task KPIs; reduction in variance across teams.

- Capacity: % pilots scaled into BAU; time-to-replication; resilience metrics during peak or change periods.

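Several of the next-cycle measures are plain ratios or dispersion statistics. The minimal sketch below assumes hypothetical record shapes that this report does not define: a `scaled_to_bau` flag per pilot, a `validated` flag per proficiency cycle, and one KPI value per team. The sample data is made up for illustration and is not assessment data.

```python
from statistics import pstdev


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal; 0.0 when there is nothing to count."""
    return round(100.0 * part / whole, 1) if whole else 0.0


def pct_pilots_scaled(pilots: list[dict]) -> float:
    """Capacity: % of pilots scaled into BAU (hypothetical 'scaled_to_bau' flag)."""
    return pct(sum(1 for p in pilots if p["scaled_to_bau"]), len(pilots))


def pct_validated_cycles(cycles: list[dict]) -> float:
    """Capability: % of proficiency cycles closed by on-the-job validation
    (hypothetical 'validated' flag)."""
    return pct(sum(1 for c in cycles if c["validated"]), len(cycles))


def team_spread(kpi_by_team: dict[str, float]) -> float:
    """Capability: population standard deviation of one role-level task KPI
    across teams; a falling value between cycles means less variance."""
    return round(pstdev(list(kpi_by_team.values())), 3)


# Made-up illustrative records, not data from this assessment.
pilots = [{"scaled_to_bau": True}, {"scaled_to_bau": False},
          {"scaled_to_bau": False}, {"scaled_to_bau": True}]
cycles = [{"validated": True}, {"validated": True}, {"validated": False}]
kpi = {"Team A": 72.0, "Team B": 64.0, "Team C": 80.0}

print("pilots scaled into BAU (%):", pct_pilots_scaled(pilots))
print("validated proficiency cycles (%):", pct_validated_cycles(cycles))
print("KPI spread across teams:", team_spread(kpi))
```

Standard deviation (the square root of variance) is used for the team spread so the result stays in the KPI's own units. Tracking it cycle over cycle shows whether variance across teams is actually falling.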

F. Data note

This report treats the 3C index values shown in your reference chart (Ability 2.10; Capability 1.42; Capacity 1.29) as the authoritative figures. The worksheet extraction view is included to show how the indices are conceptualized from the underlying matrix, not to recompute them.
