Organization Quotient

This report reads the organization vertically across the five Blocks—Knowledge (K), Skill (S), Performance (P), Revenue/Context (R), and Agility (A)—using the Streams × Blocks matrix. The intent is to identify where learning is accumulating (inputs) versus where it is converting into outcomes (performance and business impact).

A. What we measured

The Organization Quotient is expressed through five block scores derived from five learning streams (LQ, DA, CH, EC, LC). Each Stream × Block cell is scored on a 0–5 scale, and each block score is the mean of that block's five stream cells. The block averages indicate maturity at each stage of the learning-to-performance pathway:

- Knowledge (K): availability and clarity of role-relevant knowledge.

- Skill (S): ability to apply knowledge through practice and skill-building.

- Performance (P): consistent execution quality and proficiency in real work.

- Revenue/Context (R): conversion of performance into contextual business impact.

- Agility (A): adaptability, resilience, and capacity to respond to change.

Reference maturity threshold used for interpretation: 3.0 on the 0–5 scale (adjustable to the organization's chosen target line).

B. What we found (data)

B1. Streams × Blocks matrix (with Stream Index)

| Stream | K | S | P | R | A | Stream Index (mean of K, S, P, R, A) |
|---|---|---|---|---|---|---|
| LQ | 2.40 | 2.60 | 1.00 | 1.00 | 1.00 | 1.60 |
| DA | 2.20 | 1.80 | 1.00 | 1.00 | 1.40 | 1.48 |
| CH | 2.25 | 1.60 | 1.40 | 1.25 | 1.50 | 1.60 |
| EC | 2.50 | 1.50 | 1.75 | 1.00 | 1.50 | 1.65 |
| LC | 2.75 | 1.00 | 1.50 | 1.25 | 1.50 | 1.60 |

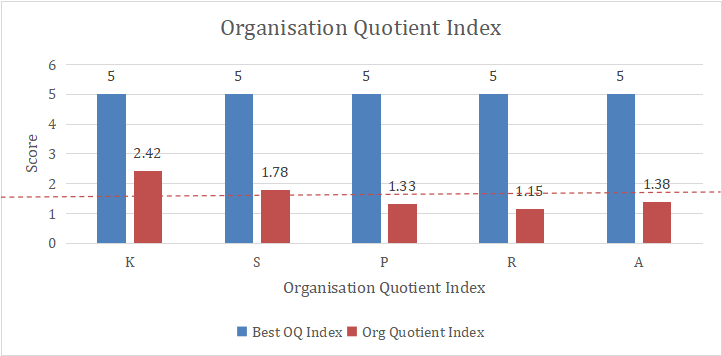
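The Stream Index column is an unweighted mean of each stream's five block scores. A minimal Python sketch that recomputes it from the table, assuming equal weights for K, S, P, R and A (the printed values are consistent with that assumption; the helper name `stream_index` is illustrative):

```python
from statistics import mean

# Streams x Blocks matrix as printed in table B1; column order is K, S, P, R, A.
MATRIX = {
    "LQ": [2.40, 2.60, 1.00, 1.00, 1.00],
    "DA": [2.20, 1.80, 1.00, 1.00, 1.40],
    "CH": [2.25, 1.60, 1.40, 1.25, 1.50],
    "EC": [2.50, 1.50, 1.75, 1.00, 1.50],
    "LC": [2.75, 1.00, 1.50, 1.25, 1.50],
}


def stream_index(block_scores):
    """Unweighted mean of one stream's five block scores (equal weights assumed)."""
    if len(block_scores) != 5:
        raise ValueError("expected exactly five block scores (K, S, P, R, A)")
    return mean(block_scores)


STREAM_INDEX = {stream: stream_index(row) for stream, row in MATRIX.items()}

if __name__ == "__main__":
    for stream, value in STREAM_INDEX.items():
        print(f"{stream}: {value:.2f}")
```

Run as a script, it prints 1.60, 1.48, 1.60, 1.65 and 1.60 for LQ, DA, CH, EC and LC, matching the table.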

B2. Block averages (Organization Quotient)

| Block | Average | Gap to 5.0 |
|---|---|---|
| K | 2.42 | 2.58 |
| S | 1.70 | 3.30 |
| P | 1.33 | 3.67 |
| R | 1.10 | 3.90 |
| A | 1.38 | 3.62 |
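Block averages are the column means of the same matrix, and the gap is 5.0 minus the average. A short Python sketch reproducing this table from the stream rows (values copied from B1; the helper name `block_summary` is illustrative):

```python
from statistics import mean

SCALE_MAX = 5.0  # top of the 0-5 scoring scale

# Block columns copied from table B1, in stream order LQ, DA, CH, EC, LC.
BLOCK_COLUMNS = {
    "K": [2.40, 2.20, 2.25, 2.50, 2.75],
    "S": [2.60, 1.80, 1.60, 1.50, 1.00],
    "P": [1.00, 1.00, 1.40, 1.75, 1.50],
    "R": [1.00, 1.00, 1.25, 1.00, 1.25],
    "A": [1.00, 1.40, 1.50, 1.50, 1.50],
}


def block_summary(columns, scale_max=SCALE_MAX):
    """Map each block to (average, gap to scale_max), both rounded to 2 decimals."""
    summary = {}
    for block, scores in columns.items():
        average = mean(scores)
        summary[block] = (round(average, 2), round(scale_max - average, 2))
    return summary


if __name__ == "__main__":
    for block, (average, gap) in block_summary(BLOCK_COLUMNS).items():
        print(f"{block}: average {average:.2f}, gap {gap:.2f}")
```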

B3. Headline reading

Knowledge is the strongest block (K=2.42) and Skill follows (S=1.70), but maturity falls sharply by Performance (P=1.33) and Revenue/Context (R=1.10), which score roughly half of Knowledge. Agility also remains low (A=1.38), suggesting limited system-level adaptation and resilience. All five blocks sit below the 3.0 reference threshold.

C. Interpretation (patterns and meaning)

C1. The conversion break

The primary pattern is a conversion break: learning exists as inputs (K, S) but does not reliably convert into repeatable performance (P) and contextual impact (R). In practice, this usually shows up as one or more of the following:

- Training completion without on-job proficiency validation.

- Inconsistent manager coaching and feedback loops.

- Weak linkage between learning activities and operational metrics (lead/lag).

- Knowledge captured, but not translated into task playbooks and standard work.

C2. Stream-level shape (why all streams look similar)

Stream Index values are tightly clustered (≈1.48–1.65). This indicates the limitation is not isolated to a single stream; it is systemic. When the block progression is weak (especially P and R), every stream average gets pulled down, producing a narrow band of low maturity across the landscape.
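One simple way to check the "systemic" reading is to compare how much scores vary across streams with how much they vary across blocks. The sketch below uses the range (max minus min) as the spread measure, which is an illustrative choice rather than the report's method; values are copied from tables B1 and B2, and `score_range` is a hypothetical helper.

```python
# Stream Index (table B1) and block averages (table B2), as printed.
STREAM_INDEX = {"LQ": 1.60, "DA": 1.48, "CH": 1.60, "EC": 1.65, "LC": 1.60}
BLOCK_AVERAGE = {"K": 2.42, "S": 1.70, "P": 1.33, "R": 1.10, "A": 1.38}


def score_range(values):
    """Spread of a set of scores, measured as max minus min."""
    values = list(values)
    return max(values) - min(values)


stream_spread = score_range(STREAM_INDEX.values())  # variation across streams
block_spread = score_range(BLOCK_AVERAGE.values())  # variation across blocks

if __name__ == "__main__":
    print(f"Across streams: {stream_spread:.2f}")
    print(f"Across blocks:  {block_spread:.2f}")
    print(f"Block spread is {block_spread / stream_spread:.1f}x the stream spread")
```

With these inputs the block range (about 1.32) is roughly 7.8 times the stream range (about 0.17), consistent with the constraint running along the block progression rather than sitting in one stream.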

D. Likely root causes (diagnostic hypotheses)

Based on the score shape (K/S higher; P/R/A low), the most probable causes to test are:

- Insufficient deliberate practice architecture: practice opportunities, repetition, and feedback are not designed into work.

- Limited proficiency measurement: ‘good performance’ is not operationalized as observable standards per role/task.

- Enablement not integrated with rhythms: learning is not embedded into reviews, cadences, and operational governance.

- Analytics spine is thin: data capture exists in pockets but does not close the loop between learning inputs and outcomes.

E. What to do next (Arena-first vs Pathway activation)

Apply the same rule to every block: if its score is below the threshold, build scaffolds first (Arena); if it is at or above the threshold, scale what already works (Pathway).
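The Arena/Pathway rule is a single threshold comparison per block. A minimal Python sketch, assuming the 3.0 reference threshold from section A and the block averages from table B2 (the function names `route` and `route_blocks` are illustrative, not part of any existing tool):

```python
THRESHOLD = 3.0  # reference maturity threshold; adjustable target line

BLOCK_AVERAGE = {"K": 2.42, "S": 1.70, "P": 1.33, "R": 1.10, "A": 1.38}


def route(score, threshold=THRESHOLD):
    """Arena (build scaffolds first) below the threshold; Pathway (scale) at or above it."""
    return "Arena" if score < threshold else "Pathway"


def route_blocks(averages, threshold=THRESHOLD):
    """Apply the same rule to every block."""
    return {block: route(score, threshold) for block, score in averages.items()}


if __name__ == "__main__":
    print(route_blocks(BLOCK_AVERAGE))
    # A lower target line changes the routing for any block that clears it.
    print(route_blocks(BLOCK_AVERAGE, threshold=2.0))
```

At 3.0 every block routes to Arena; lowering the target to a hypothetical 2.0 would move only K to Pathway.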

E1. Arena-first priorities (scores below threshold)

- K (avg 2.42)

- S (avg 1.70)

- P (avg 1.33)

- R (avg 1.10)

- A (avg 1.38)

E2. Recommended interventions (90-day moves)

- Build the Performance scaffold (P): define proficiency standards by role/task; introduce on-job validation (observation, task checks, certification).

- Build the Revenue/Context scaffold (R): map performance indicators to business outcomes; define lead/lag measures and run monthly reviews.

- Strengthen the Agility scaffold (A): create a sensing-and-response routine (issue capture → experiment → learn → standardize) at function level.

- Convert Knowledge into task playbooks (K→S): restructure content into ‘standard work’ + ‘tacit tips’ + ‘common errors’ for execution.

E3. Measures to track (leading and lag indicators)

- Leading: % roles with defined proficiency standards; % employees completing validated practice cycles; coaching cadence adherence.

- Lag: improvement in role-level performance indicators; reduction in variance between teams/functions; uplift in P and R block scores next cycle.

- Analytics: completeness of data capture across participation → practice → proficiency → outcomes.

F. Data note (for consistency)

The ‘Total’ row of the source matrix (not reproduced in B1) shows K=0.48, while the other block totals match the block averages. Calculated from the stream values, the Knowledge average is K=2.42, which suggests the K ‘Total’ cell may have a formula or reference issue. This report uses the block averages computed from the stream rows shown above.
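The reported 0.48 is close to the K average divided by 5 a second time (2.42 / 5 ≈ 0.48), or equivalently the K column summed and divided by 25. That pattern is consistent with a double-division formula error, but it is only a hypothesis to check in the worksheet. A small Python check, with the K values copied from table B1 and `matches_reported` as a hypothetical helper:

```python
from statistics import mean

K_COLUMN = [2.40, 2.20, 2.25, 2.50, 2.75]  # K scores for LQ, DA, CH, EC, LC
REPORTED_K_TOTAL = 0.48  # value shown in the 'Total' row


def matches_reported(value, reported, places=2):
    """True if value, rounded to the reported precision, equals the reported figure."""
    return round(value, places) == round(reported, places)


k_average = mean(K_COLUMN)                # correct block average (2.42)
double_divided = sum(K_COLUMN) / (5 * 5)  # hypothesis: averaged, then divided by 5 again

if __name__ == "__main__":
    print(f"Correct K average:      {k_average:.2f}")
    print(f"Double-divided K value: {double_divided:.3f}")
    print("Matches reported Total:", matches_reported(double_divided, REPORTED_K_TOTAL))
```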